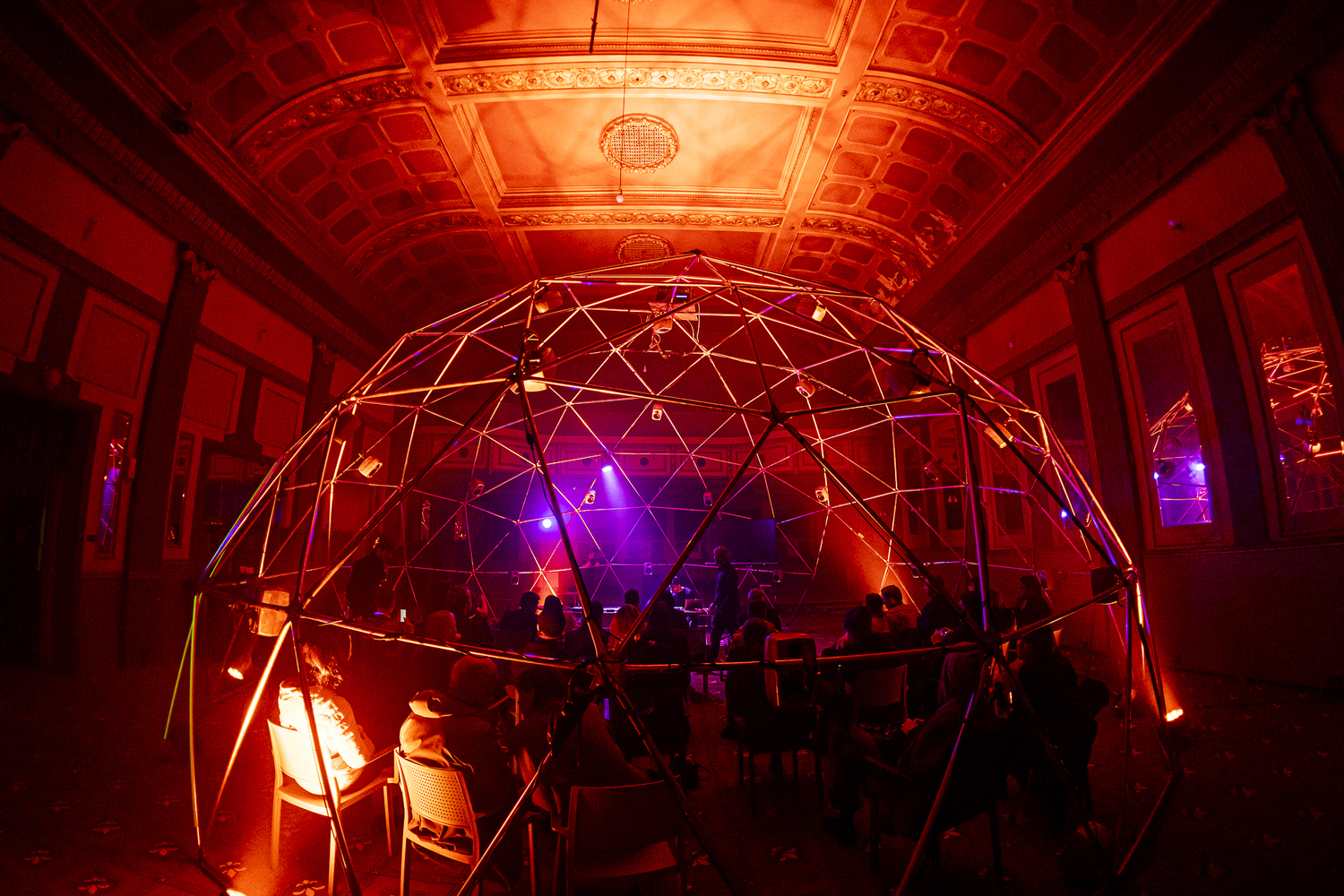

Riga, 2023

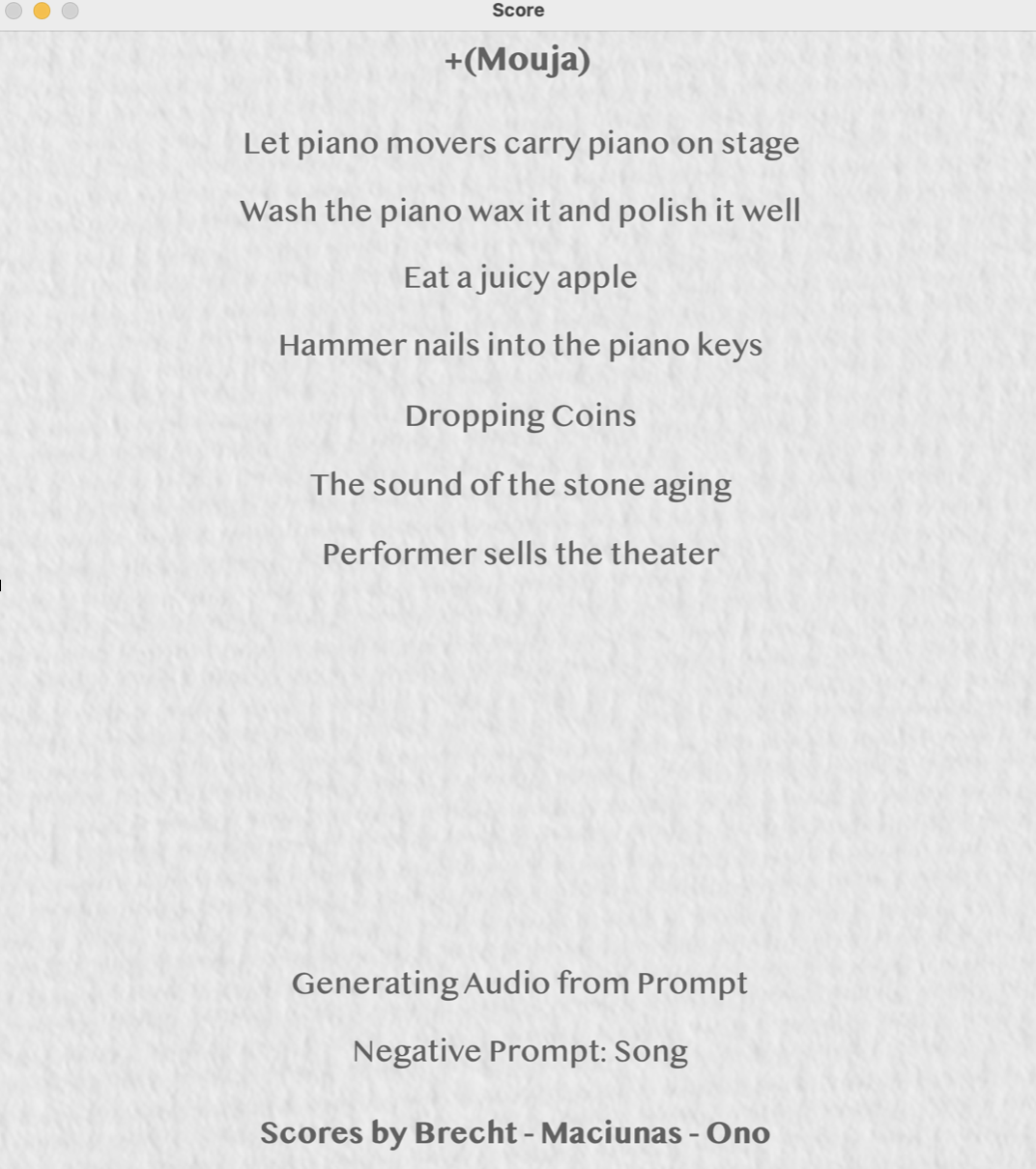

Mouja+ combines real-time neural audio synthesis, performed with my interfaces Thales and Stacco, with a text-to-audio model (Stable Audio) prompted on stage with text scores by Fluxus artists. The text scores generate sonic textures that I dynamically layer with one another and use as inputs for timbre transfer with neural synthesis models. For this piece, I designed a DAW that bridges my local machine and a remote server, generating audio samples in real time from text prompted live.

The scores are displayed to the audience. Together with the dynamic spatialisation of the sound sources, they contribute to the sense of eeriness that permeates the performance and to the apparent absence of all the subjects and actors conjured on stage.